Deploying and Testing Machine Learning Applications in Kubernetes with Gradio

How can Gradio be utilized to facilitate the deployment and testing of Machine Learning Applications within a Kubernetes environment, ensuring user-friendly interaction and efficient utilization of resources? What benefits does using Gradio in conjunction with Kubernetes offer when deploying and testing diverse Machine Learning Applications, and how does it streamline the process for developers and end-users alike?

NOTE: All the code and examples used in this blog post are available in a GitHub repository. Check it out!

1. Gradio

A highly effective approach for showcasing your machine learning model, API, or data science workflow to others involves developing an interactive application that enables users or peers to experiment with the demo directly through their web browsers.

Gradio, a Python library, offers a streamlined solution for constructing such demos and facilitating easy sharing. In many cases, achieving this only requires a concise snippet of code.
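As a minimal sketch of how small such a demo can be, here is a text-in, text-out interface. The `greet` function and the `build_demo` helper are illustrative names that do not come from the repository, and the sketch assumes Gradio is installed:

```python
def greet(name: str) -> str:
    """Toy function standing in for a real model."""
    return f"Hello, {name}!"


def build_demo():
    """Wrap greet() in a Gradio interface (requires `pip install gradio`)."""
    import gradio as gr  # imported lazily so greet() can be used without Gradio

    # "text" is Gradio's shortcut for a textbox input/output component.
    return gr.Interface(fn=greet, inputs="text", outputs="text")
```

Calling `build_demo().launch()` should start a local web server and print the URL to open in the browser.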

1.1 Gradio and Kubernetes: the perfect match!

When coupled with Kubernetes, this approach gains additional advantages. Kubernetes provides a robust orchestration platform that enables seamless deployment, management, and scaling of containerized applications. By combining Gradio’s interactive demos with Kubernetes, you harness the power of containerization, making it easier to package and distribute your machine learning applications consistently across various environments.

The benefits of using Kubernetes alongside Gradio include:

- Scalability: Kubernetes allows your interactive demos built with Gradio to be easily scaled up or down based on demand. This ensures that as more users interact with your demos, the underlying infrastructure can handle the load efficiently.

- Resource Efficiency: Kubernetes schedules containers according to the CPU and memory requests and limits you declare, so your Gradio demos are allocated the right amount of computational resources. This helps prevent overutilization or underutilization, leading to cost savings and better performance.

- High Availability: Kubernetes provides features like automatic load balancing and failover, which enhance the availability of your demos. This means that even if one instance fails, others will seamlessly take over, minimizing downtime.

- Monitoring and Management: Kubernetes offers robust monitoring and management tools. You can easily track the performance of your interactive demos, gather metrics, and troubleshoot any issues that arise.

- Consistency: Kubernetes ensures consistency across different deployment environments. Your Gradio-based demos will behave consistently whether they are running on your local machine, a development server, or a production cluster.

- Easy Updates and Rollbacks: Kubernetes facilitates smooth updates and rollbacks of your demos. This is crucial when you want to introduce new features or fixes without disrupting user interactions.

In summary, combining Gradio with Kubernetes not only allows you to create engaging interactive demos but also supports efficient deployment, scaling, monitoring, and management of these demos across different environments. This synergy helps you share your machine learning applications effectively and provide a positive user experience.

2. Install K8s Cluster using KIND

Kind (Kubernetes in Docker) is a tool that allows you to create local Kubernetes clusters using Docker containers. It provides an environment to run Kubernetes clusters for development, testing, and experimentation purposes.

The benefits of using Kind include easy setup and teardown of clusters, fast cluster creation, and the ability to simulate multi-node Kubernetes clusters on a single machine. It helps streamline the development and testing workflow by providing a lightweight and isolated environment that closely resembles a production Kubernetes cluster.

- Install Docker and ensure that the Docker service is enabled and running:

sudo dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo

sudo dnf install docker-ce --nobest

sudo systemctl enable --now docker

NOTE: Podman can also be used, but for certain parts of this blog post Docker worked out of the box with KIND, without further tweaks.

- Create a Kind K8s cluster to deploy the Gradio app:

CLUSTER_NAME="k8s"

cat <<EOF | kind create cluster --name $CLUSTER_NAME --wait 200s --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
EOF

kubectl cluster-info --context kind-k8s

3. Installing K8s Ingress Controller

Kubernetes Ingress is crucial for managing external access to services within a cluster, providing routing and load balancing capabilities. Nginx Ingress, as a popular Ingress controller, enables seamless traffic distribution, SSL termination, and routing based on hostnames or paths, enhancing scalability and security.

- Install the Ingress Nginx adapted for Kind:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/kind/deploy.yaml

The provided command installs Nginx Ingress, extending Kubernetes functionality by efficiently directing incoming external requests to appropriate services using defined rules and configurations. This optimizes resource utilization and simplifies external connectivity management.

4. Visual Recognition Machine Learning model using MobileNetV2

MobileNetV2 is a convolutional neural network architecture that seeks to perform well on mobile devices. It is based on an inverted residual structure where the residual connections are between the bottleneck layers. The intermediate expansion layer uses lightweight depthwise convolutions to filter features as a source of non-linearity. As a whole, the architecture of MobileNetV2 contains an initial fully convolutional layer with 32 filters, followed by 19 residual bottleneck layers.

More information about MobileNetV2 is available in the original paper by Sandler et al.

- Let’s dive into the ML App code!

import requests
import tensorflow as tf
import gradio as gr

# Load the pre-trained MobileNetV2 model.
mobile_net = tf.keras.applications.MobileNetV2()

# Download human-readable labels for ImageNet.
response = requests.get("https://git.io/JJkYN")
labels = response.text.split("\n")


# Preprocess the input image, run the prediction with mobile_net, and return
# a dictionary mapping each class label to its predicted probability.
def classify_image(input_images):
    input_images = input_images.reshape((-1, 224, 224, 3))
    input_images = tf.keras.applications.mobilenet_v2.preprocess_input(input_images)
    prediction = mobile_net.predict(input_images).flatten()
    return {labels[i]: float(prediction[i]) for i in range(1000)}

This code demonstrates the creation of an image classification system using TensorFlow and Gradio. It starts by importing necessary libraries: requests for HTTP requests, tensorflow for machine learning, and gradio for creating a user interface.

The MobileNetV2 model is loaded from tf.keras.applications with its default weights, which are pre-trained on ImageNet, so it can classify images into 1,000 categories out of the box.

The code then fetches the human-readable class labels for those 1,000 ImageNet categories. These labels are used to interpret the model’s predictions.

The classify_image function classifies an input image. It takes the raw image data, reshapes it into a batch of 224x224 RGB images, and applies MobileNetV2’s preprocessing function. The model then predicts the class probabilities, and the result is flattened into a one-dimensional array.

The function returns a dictionary containing class labels and their corresponding probabilities, with the keys being the class labels and the values being the prediction probabilities.
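To illustrate the shape of that return value, here is a small sketch in plain Python, without TensorFlow. The helper names and the toy labels and probabilities are made up for illustration. It builds the label-to-probability dictionary and picks the highest-scoring entries, roughly what a label component limited to a few top classes would display:

```python
def to_label_scores(labels, probabilities):
    """Pair each class label with its probability, as classify_image does."""
    return {label: float(p) for label, p in zip(labels, probabilities)}


def top_classes(scores, k=4):
    """Return the k (label, probability) pairs with the highest probability."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]


# Toy values for illustration only; a real run yields 1,000 entries.
toy_labels = ["zebra", "tiger", "lynx", "tiger cat"]
toy_probabilities = [0.003, 0.84, 0.001, 0.09]

scores = to_label_scores(toy_labels, toy_probabilities)
print(top_classes(scores, k=2))  # [('tiger', 0.84), ('tiger cat', 0.09)]
```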

5. Integrating Gradio for deploying the Machine Learning App

- Let’s integrate the Gradio Python library to deploy our deep learning image classification model in an easy and visual way!

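# Define a run function that sets up the image input and the label output for
# classification using gr.Interface.
# NOTE: gr.Image(shape=...) and interpretation= belong to the Gradio 3.x API;
# they were removed in Gradio 4.x.
def run():
    image = gr.Image(shape=(224, 224))
    label = gr.Label(num_top_classes=4)
    title = "Rcarrata's Image Classification Example"
    demo = gr.Interface(
        fn=classify_image, inputs=image, outputs=label, interpretation="default", title=title
    )
    # Listen on all interfaces so the app is reachable from outside the container.
    demo.launch(server_name="0.0.0.0", server_port=7860)


if __name__ == "__main__":
    run()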

The Gradio library is utilized to set up a web-based interface for the image classification system. Users can upload images through this interface, and the classify_image function processes them using the MobileNetV2 model.

The uploaded images are fed into the classify_image function, and the predictions are generated. The interface then displays the class labels along with their respective probabilities, allowing users to understand the model’s assessment of the uploaded images.

By integrating Gradio, this code enables easy interaction with the image classification model without requiring users to write code. It provides an accessible way for individuals to explore how the model categorizes different images and assesses its confidence in those classifications.

6. Containerizing the ML App

Now it’s time to containerize our Machine Learning Image Classification app into a Container Image in order to deploy it into k8s / OpenShift.

- The Containerfile is essentially the same as for any other Python app, so it’s quite straightforward:

FROM python:3.9

WORKDIR /app

COPY ./requirements.txt /app/requirements.txt

RUN pip install --no-cache-dir -r /app/requirements.txt

COPY main.py /app

EXPOSE 7860

CMD ["python", "main.py"]

- We will use a Makefile that wraps the docker/podman build, tag and push to Quay.io:

make all

And voilà, we have our brand new ML Visual Classification App container Image stored in Quay.io ready to be deployed!
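The Makefile itself is not shown here. As a rough sketch of the build, tag and push sequence it wraps, the steps could be expressed as follows. The image names and the `image_commands` and `run_all` helpers are hypothetical and do not come from the repository:

```python
import subprocess


def image_commands(engine, local_name, remote_ref, containerfile="Containerfile"):
    """Return the build, tag and push commands for the given container engine."""
    return [
        [engine, "build", "-f", containerfile, "-t", local_name, "."],
        [engine, "tag", local_name, remote_ref],
        [engine, "push", remote_ref],
    ]


def run_all(commands):
    """Run each command in order and stop at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


# Hypothetical names; replace them with your own Quay.io repository.
commands = image_commands(
    "podman", "gradioapp:latest", "quay.io/example/gradioapp:latest"
)
```

Calling `run_all(commands)` would then execute the three steps, assuming the engine is installed and you are logged in to the registry.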

NOTE: remember that this is a PoC and this Containerfile can be improved in several ways! Use best practices!!

7. Deploying our ML Container Image into K8s

Now that we have our ML App Container Image ready to be deployed, let’s try it!

The app is composed of a standard K8s Deployment, a K8s Service and a K8s Ingress.

To deploy the manifests in our K8s KIND cluster, we will use kustomize:

kubectl apply -k manifests/overlays/
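The manifests are not reproduced in this post. As a hedged sketch of what the Deployment, Service and Ingress might contain, the three objects can be described as Python dictionaries and printed as JSON, which kubectl also accepts. The `gradioapp` names and port 7860 come from this post. The image reference, the labels and the Service port are assumptions:

```python
import json

APP = "gradioapp"  # namespace and resource name seen in the kubectl output
PORT = 7860        # port the Gradio app listens on
IMAGE = "quay.io/example/gradioapp:latest"  # hypothetical image reference


def deployment():
    labels = {"app": APP}  # assumed label; ties pods to the Service selector
    container = {"name": APP, "image": IMAGE, "ports": [{"containerPort": PORT}]}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "namespace": APP},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


def service():
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP, "namespace": APP},
        "spec": {
            "selector": {"app": APP},
            "ports": [{"port": 80, "targetPort": PORT}],
        },
    }


def ingress():
    backend = {"service": {"name": APP, "port": {"number": 80}}}
    path = {"path": "/", "pathType": "Prefix", "backend": backend}
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{APP}-ingress", "namespace": APP},
        "spec": {"rules": [{"http": {"paths": [path]}}]},
    }


manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [deployment(), service(), ingress()],
}
print(json.dumps(manifest, indent=2))
```

Saved to a file, this output could be applied with `kubectl apply -f` once the namespace exists. The kustomize overlay used in this post remains the reference.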

- After a while, we can check the pod running:

kubectl get pod -n gradioapp

NAME                         READY   STATUS    RESTARTS   AGE
gradioapp-7fcf59fcb8-rw9pg   1/1     Running   0          22s

- If we check the Ingress, our app is exposed on port 80 through the Nginx Ingress:

kubectl get ingress -n gradioapp

NAME                CLASS    HOSTS   ADDRESS     PORTS   AGE
gradioapp-ingress   <none>   *       localhost   80      110s
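Since the pod and the Ingress can take a little while to become ready, a small polling helper can confirm that the app answers on port 80 before opening the browser. This is a sketch using only the standard library. The helper names and the default timeout values are arbitrary choices and do not come from the repository:

```python
import time
import urllib.error
import urllib.request


def http_ok(url: str) -> bool:
    """Return True if a GET request to url answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False  # not reachable yet, or answered with an error status


def wait_until_ready(check, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Call check() repeatedly until it returns True or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while not check():
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
    return True
```

For example, `wait_until_ready(lambda: http_ok("http://localhost/"))` returns True as soon as the Ingress serves the Gradio page, or False once the timeout expires.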

Testing our Gradio App code with some examples

Now that it’s deployed, let’s have fun with our app!

- If we open localhost:80 in the web browser (in my case I deployed the KIND cluster on an external NUC server), we can see our brand new Gradio App:

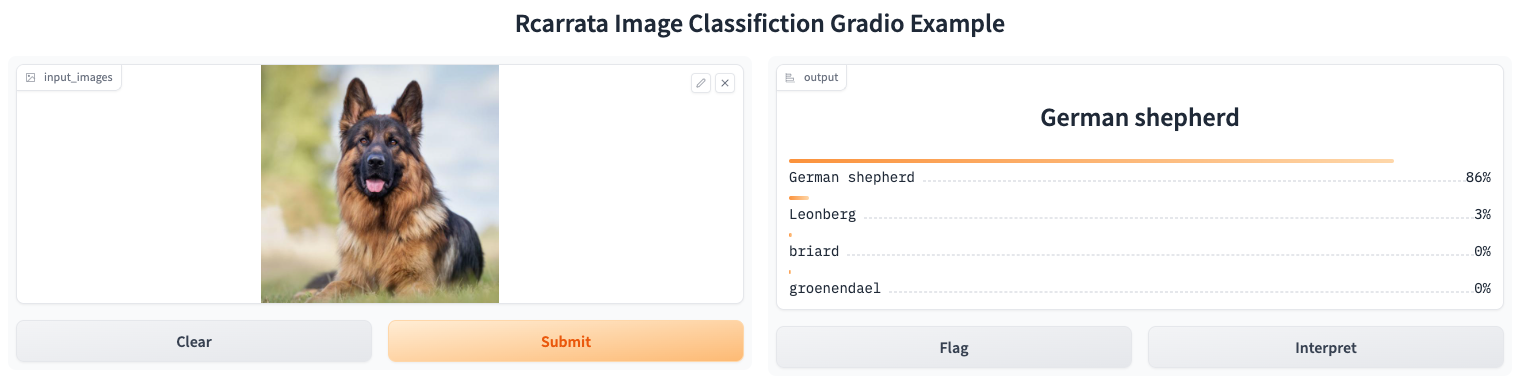

Let’s test it with a couple of examples!

First let’s use an image of a German Shepherd (my favourite dog):

Pretty cool, huh? In just a few seconds, and with a relatively small Machine Learning model, the app identified the image as a German Shepherd with 86% confidence!

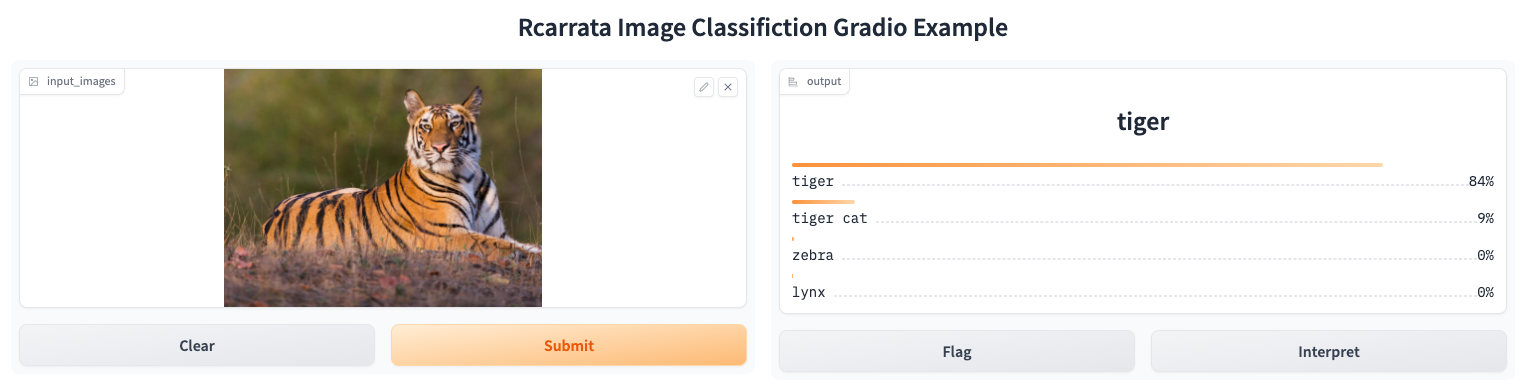

Let’s try another animal, such as a Tiger:

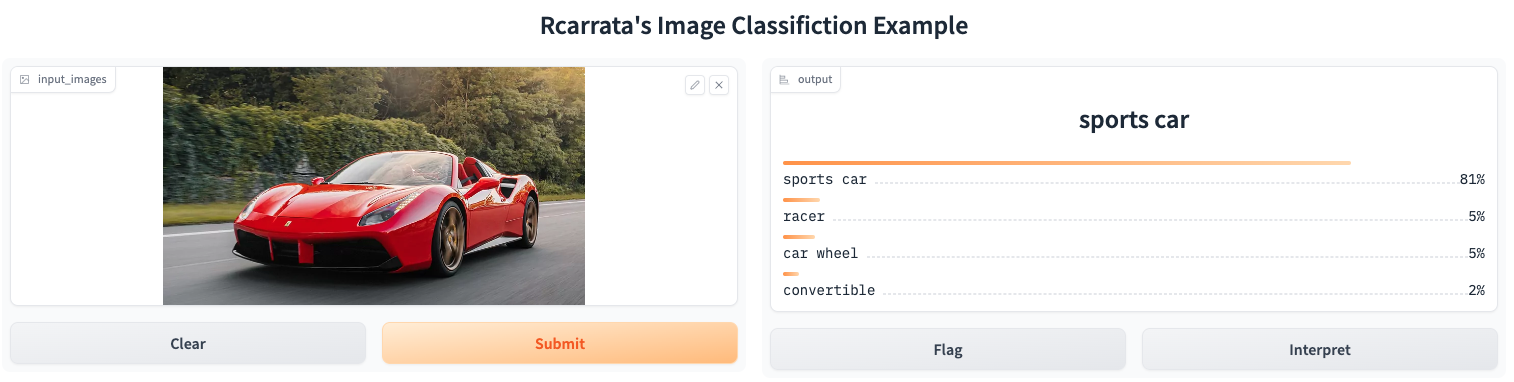

And if we switch to a “thing”, like a fancy car (Ferrari), what will happen?

Also pretty accurate!

8. Interacting with our App API using Gradio Client libraries

Another way to interact with our ML App is the Gradio Client, a Python library for sending requests to Gradio apps.

I’ve written a small Python program to send requests to the Gradio App deployed in k8s:

python test_app.py --url http://192.168.3.3 --image ./assets/tiger.jpeg

Loaded as API: http://192.168.3.3/ ✔

/var/folders/gc/9v6_6d8s2q51clcgwz747j2m0000gn/T/gradio/tmpcu35x_x8.json

We received the prediction from our App almost instantly. If we check the results stored in the JSON file, we can see exactly the same results as when we interacted through the browser:

cat /var/folders/gc/9v6_6d8s2q51clcgwz747j2m0000gn/T/gradio/tmpcu35x_x8.json | jq -r .

{
  "label": "tiger",
  "confidences": [
    {
      "label": "tiger",
      "confidence": 0.8418528437614441
    },
    {
      "label": "tiger cat",
      "confidence": 0.08996598422527313
    },
    {
      "label": "zebra",
      "confidence": 0.003402276895940304
    },
    {
      "label": "lynx",
      "confidence": 0.0014183077728375793
    }
  ]
}
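The test_app.py script is not listed in this post. The following is a hypothetical sketch of how such a client could be written, assuming the `gradio_client` package and the default `/predict` endpoint that `gr.Interface` exposes. The helper names are illustrative, and newer client releases may expect file inputs to be wrapped differently:

```python
import argparse


def parse_args(argv=None):
    """Parse the --url and --image options used on the command line above."""
    parser = argparse.ArgumentParser(description="Send an image to a Gradio app")
    parser.add_argument("--url", required=True, help="Base URL of the Gradio app")
    parser.add_argument("--image", required=True, help="Path to the image file")
    return parser.parse_args(argv)


def top_prediction(result: dict):
    """Return (label, confidence) for the highest-scoring entry of a label output."""
    best = max(result["confidences"], key=lambda item: item["confidence"])
    return best["label"], best["confidence"]


def predict(url: str, image_path: str):
    """Call the app's /predict endpoint and return what the client hands back."""
    from gradio_client import Client  # requires `pip install gradio_client`

    client = Client(url)
    # Assumption: the image is passed as a file path, as in the run shown above.
    return client.predict(image_path, api_name="/predict")
```

In the run shown above, the client printed the path of a JSON file. Loading that file with `json.load` and passing the result to `top_prediction` would yield the winning label and its confidence.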

And with that ends the third blog post, on Deploying and Testing Machine Learning Applications in Kubernetes with Gradio.

NOTE: Opinions expressed in this blog are my own and do not necessarily reflect those of the company I work for.

Happy MLOpsing!